Between Microsoft Build and Google I/O, there are probably more people saying “AI” this week than any previous week in history. But the AI those companies deploy tends to live off in a cloud somewhere — XNOR puts it on devices that may not even be capable of an internet connection. The startup has just pulled in $12 million to continue its pursuit of bringing AI to the edge.

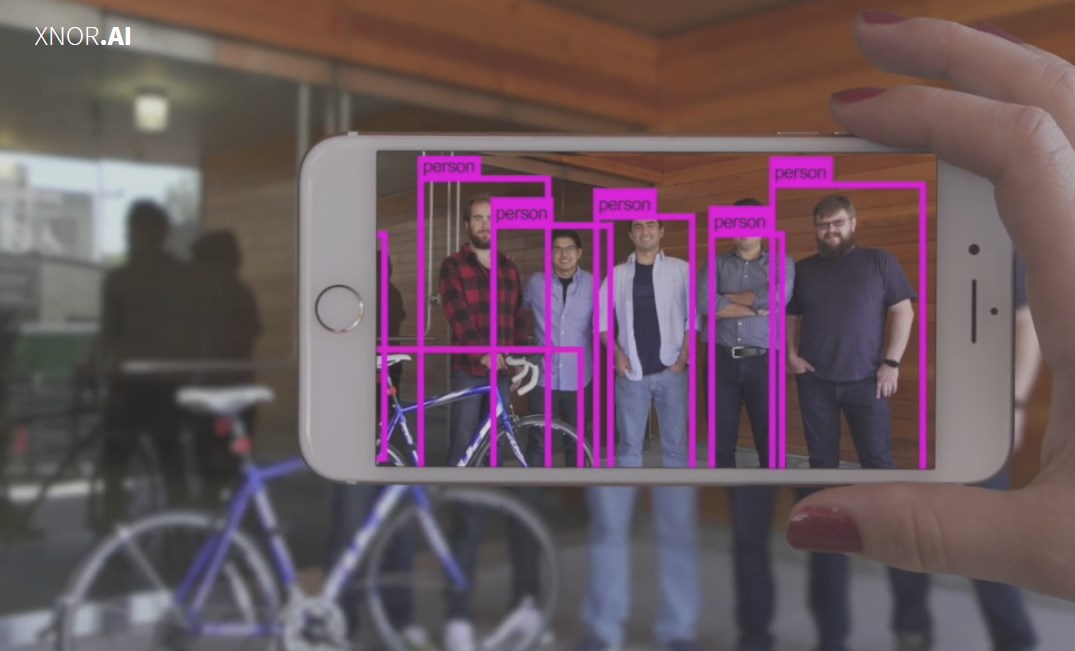

I wrote about the company when it spun off of Seattle-based, Paul Allen-backed AI2; its product is essentially a proprietary method of rendering machine learning models in terms of operations that can be performed quickly by nearly any processor. The speed, memory and power savings are huge, enabling devices with bargain-bin CPUs to perform serious tasks like real-time object recognition and tracking that normally take serious processing chops to achieve.
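XNOR's method is proprietary and isn't spelled out here, so the following is only a rough illustration of the general idea. It assumes weights and inputs are reduced to +1/-1 values, as in the XNOR-Net research Farhadi co-authored. Under that assumption, a dot product collapses into a bitwise XNOR followed by a bit count, both of which nearly any processor handles quickly. The function names `pack_signs` and `binary_dot` are hypothetical, made up for this sketch.

```python
def pack_signs(values):
    """Pack a list of +1/-1 values into one int, one bit each (+1 -> 1, -1 -> 0)."""
    bits = 0
    for i, v in enumerate(values):
        if v > 0:
            bits |= 1 << i
    return bits


def binary_dot(a_bits, b_bits, n):
    """Dot product of two packed +1/-1 vectors of length n via XNOR and popcount."""
    mask = (1 << n) - 1                  # keep only the n meaningful bits
    xnor = ~(a_bits ^ b_bits) & mask     # bit is 1 wherever the signs agree
    matches = bin(xnor).count("1")       # popcount: number of agreeing positions
    # Each agreement contributes +1, each disagreement -1.
    return 2 * matches - n


a = [1, -1, 1, 1, -1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1, 1, -1]

# Ordinary multiply-and-add, for comparison.
reference = sum(x * y for x, y in zip(a, b))
result = binary_dot(pack_signs(a), pack_signs(b), len(a))
print(result, reference)  # 2 2
```

The multiply-and-add loop touches every element, while the packed version handles a whole machine word of elements with a couple of instructions. That is the sort of saving that makes a bargain-bin CPU plausible for this work.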

Since its debut the company has taken $2.6 million in seed funding, and it has now filled up its A round, led by Madrona Venture Group, along with NGP Capital, Autotech Ventures and Catapult Ventures.

“AI has done great,” co-founder Ali Farhadi told me, “but for it to become revolutionary it needs to scale beyond where it is right now.”

The fundamental problem, he said, is that AI is too expensive, in terms of both processing time and money.

Nearly all major “AI” products do their magic by means of huge banks of computers in the cloud. You send your image or voice snippet or whatever, it does the processing with a machine learning model hosted in some data center, then sends the results back.

For a lot of stuff, that’s fine. It’s okay if Alexa responds in a second or two, or if your images get enhanced with metadata over a period of hours while you’re not paying attention. But if you need a result not just in a second, but in a hundredth of a second, there’s no time for the cloud. And increasingly, there’s no need.

XNOR’s technique allows things like computer vision and voice recognition to be stored and run on devices with extremely limited processing power and RAM. And we’re talking Raspberry Pi Zero here, not just an older iPhone.

If you wanted to have a camera or smart home type device in every room of your home, monitoring for voices, responding to commands, sending its video feed in to watch for unauthorized visitors or emergency situations — that constant pipe to the cloud starts getting crowded real fast. Better not to send it at all.

This has the pleasant byproduct of not requiring you to send what might be personal data to some cloud server, where you have to trust that it won’t be stored or used against your will. If the data is processed entirely on the device, it’s never shared with third parties. That’s an increasingly attractive proposition.

Developing a model for edge computing isn’t cheap, though. Although AI developers are multiplying, comparatively few are trying to run on resource-limited devices like old phones or cheap security cameras.

XNOR’s model lets a developer or manufacturer plug in a few basic attributes and get a model pre-trained for their needs.

Say you’re the cheap security camera maker; you need to recognize people and pets and fires, but not cars or boats or plants, you’re using such and such ARM core and camera and you need to render at five frames per second but only have 128 MB of RAM to work with. Ding — here’s your model.

Or say you’re a parking lot company and you need to recognize empty spots, license plates and people lurking suspiciously. You’ve got such and such a setup. Ding — here’s your model.

These AI agents can be dropped into various code bases fairly easily and never need to phone home or have their data audited or updated; they’ll just run like greased lightning on the platform. Farhadi told me they’ve established the most common use cases and devices through research and feedback, and many customers should be able to grab an “off the shelf” model just like that. That’s Phase 1, as he called it, and should be launching this fall.

Phase 2 (in early 2019) will allow for more customization: for example, if your parking lot model becomes a police parking lot model and needs to recognize a specific set of cars and people, or if you’re using proprietary hardware not on the list, new models can be trained up on demand.

And Phase 3 is taking models that normally run on cloud infrastructure and adapting and “XNORifying” them for edge deployment. No timeline on that one.

Although the technology lends itself in some ways to the needs of self-driving cars, Farhadi told me they aren’t going after that sector — yet. It’s still essentially in the prototype phase, he said, and creators of autonomous vehicles are currently trying to prove the idea works fundamentally, not trying to optimize and deliver it at lower cost.

Edge-based AI models will surely be increasingly important as the efficiency of algorithms improves, the power of devices rises and the demand for quick-turnaround applications grows. XNOR seems to be among the vanguard in this emerging area of the field, but you can almost certainly expect competition to expand along with the market.

from Startups – TechCrunch https://ift.tt/2jHtF1q